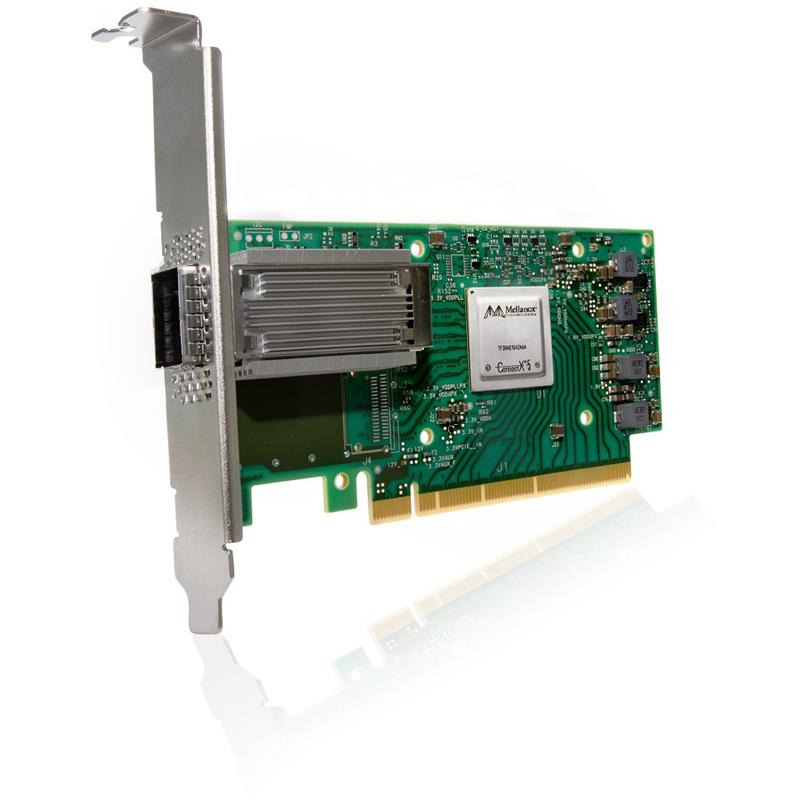

Mellanox MCX555A-ECAT ConnectX-5 VPI EDR/100GbE Adapter Plug-in Card - Gen 3 PCIe x16 Single-Port QSFP28 Connectors Optical Fiber

The ConnectX-5 EDR/100GbE adapter (MCX555A-ECAT) supports optical fiber cabling to span longer distances and provides high data transmission rates between servers and network components. It is a plug-in card with Gen 3.0 PCI Express x16 host connectivity, a single QSFP28 port, and 100GBase-X network technology.
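As a rough sanity check on the host interface, the raw bandwidth of a PCIe 3.0 x16 slot can be compared with the 100Gb/s port rate. The sketch below uses the standard PCIe 3.0 figures (8 GT/s per lane, 128b/130b encoding); it ignores packet and protocol overhead, so usable throughput will be lower, and the names are illustrative only.

```python
# Back-of-the-envelope check: raw PCIe 3.0 x16 bandwidth vs. a 100 Gb/s port.
# Assumption: protocol (TLP/DLLP) overhead is ignored, so real throughput is lower.
GT_PER_LANE = 8.0        # PCIe 3.0 signalling rate per lane, in GT/s
ENCODING = 128 / 130     # PCIe 3.0 uses 128b/130b line encoding
LANES = 16               # x16 slot
PORT_RATE_GBPS = 100.0   # EDR InfiniBand / 100GbE port rate


def pcie_raw_gbps(gt_per_lane: float, lanes: int, encoding: float) -> float:
    """Raw per-direction link bandwidth in Gb/s after line encoding."""
    return gt_per_lane * lanes * encoding


raw = pcie_raw_gbps(GT_PER_LANE, LANES, ENCODING)
headroom = raw - PORT_RATE_GBPS
print(f"PCIe 3.0 x16 raw: {raw:.1f} Gb/s per direction")
print(f"Headroom over a 100 Gb/s port: {headroom:.1f} Gb/s")
```

On these assumptions a single 100Gb/s port fits within a Gen 3 x16 link, which is consistent with this card being a single-port model.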

ConnectX-5 delivers high bandwidth, low latency, and high computation efficiency for high-performance, data-intensive, and scalable compute and storage platforms. ConnectX-5 enhances HPC infrastructures by providing MPI, SHMEM/PGAS, and Rendezvous Tag Matching offloads, hardware support for out-of-order RDMA Write and Read operations, and additional Network Atomic and PCIe Atomic operations support.

ConnectX-5 VPI uses both IBTA RDMA (Remote Direct Memory Access) and RoCE (RDMA over Converged Ethernet) technologies, delivering low latency and high performance. ConnectX-5 enhances RDMA network capabilities by completing the switch Adaptive Routing capabilities and supporting data delivered out of order while maintaining ordered completion semantics. This provides multipath reliability and efficient support for all network topologies, including DragonFly and DragonFly+.

ConnectX-5 also supports Burst Buffer offload for background checkpointing without interfering with main CPU operations, and the Dynamically Connected Transport (DCT) service to ensure extreme scalability for compute and storage systems.

Features

Tag Matching and Rendezvous Offloads

Adaptive Routing on Reliable Transport

Burst Buffer Offloads for Background Checkpointing

NVMe over Fabric (NVMe-oF) Offloads

Back-End Switch Elimination by Host Chaining

Embedded PCIe Switch

Enhanced vSwitch/vRouter Offloads

Flexible Pipeline

RoCE for Overlay Networks

PCIe Gen 4 Support

Extra Specifications for Mellanox MCX555A-ECAT ConnectX-5 VPI EDR/100GbE Adapter Plug-in Card - Gen 3 PCIe x16 Single-Port QSFP28 Connectors Optical Fiber

| Main Specifications | |
| --- | --- |
| Device Type | 100 Gigabit Ethernet Card |
| Form Factor | Plug-in Card |
| Interface (Bus) Type | PCI Express 3.0 x16 |
| Dimensions (WxD) | Width 2.7 in x Depth 5.6 in |
| InfiniBand | EDR / FDR / QDR / DDR / SDR, IBTA Specification 1.3 compliant, RDMA, Send/Receive semantics, Hardware-based congestion control, Atomic operations, 16 million I/O channels, 256 to 4Kbyte MTU, 2Gbyte messages, 8 virtual lanes + VL15 |
| CPU Offloads | RDMA over Converged Ethernet (RoCE), TCP/UDP/IP stateless offload, LSO, LRO, checksum offload, RSS (also on encapsulated packet), TSS, HDS, VLAN and MPLS tag insertion/stripping, Receive flow steering, Data Plane Development Kit (DPDK) for kernel bypass applications, Open vSwitch (OVS) offload using ASAP²: flexible match-action flow tables, tunneling encapsulation/de-capsulation, Intelligent interrupt coalescence, Header rewrite supporting hardware offload of NAT router |

| Connectivity | |
| --- | --- |
| Connectors | Single-port QSFP28 |

| Networking | |
| --- | --- |
| Ethernet | 100GbE / 50GbE / 40GbE / 25GbE / 10GbE / 1GbE, IEEE 802.3bj, 802.3bm 100 Gigabit Ethernet, IEEE 802.3by, Ethernet Consortium 25, 50 Gigabit Ethernet, supporting all FEC modes, IEEE 802.3ba 40 Gigabit Ethernet, IEEE 802.3ae 10 Gigabit Ethernet, IEEE 802.3az Energy Efficient Ethernet (fast wake), IEEE 802.3ap based auto-negotiation and KR startup, IEEE 802.3ad, 802.1AX Link Aggregation, IEEE 802.1Q, 802.1P VLAN tags and priority, IEEE 802.1Qau (QCN) Congestion Notification, IEEE 802.1Qaz (ETS), IEEE 802.1Qbb (PFC), IEEE 802.1Qbg, IEEE 1588v2, Jumbo frame support (9.6KB) |
| Enhanced Features | Hardware-based reliable transport, Collective operations offloads, Vector collective operations offloads, Mellanox PeerDirect RDMA (aka GPUDirect) communication acceleration, 64b/66b encoding, Extended Reliable Connected transport (XRC), Dynamically Connected transport (DCT), Enhanced Atomic operations, Advanced memory mapping support, allowing user mode registration and remapping of memory (UMR), On Demand Paging (ODP), MPI Tag Matching, Rendezvous protocol offload, Out-of-order RDMA supporting Adaptive Routing, Burst buffer offload, In-Network Memory registration-free RDMA memory access |
| Storage Offloads | NVMe over Fabric offloads for target machine, T10 DIF Signature handover operation at wire speed, for ingress and egress traffic, Storage protocols: SRP, iSER, NFS RDMA, SMB Direct, NVMe-oF |

| Warranty: | 3 years |
| --- | --- |
| Coverage: | Replacement or repair |
| Average Service Time: | 1-2 weeks |
| E-mail: | returnservices@wiredzone.com |

| If you need Technical Support for this item please contact: | |
| --- | --- |
| Provider: | Mellanox |
| Type of Support: | FULL |
| Phone No.: | 408-916-0055 |
